Warning

You are currently viewing v2.15 of the documentation, which is not the latest version.

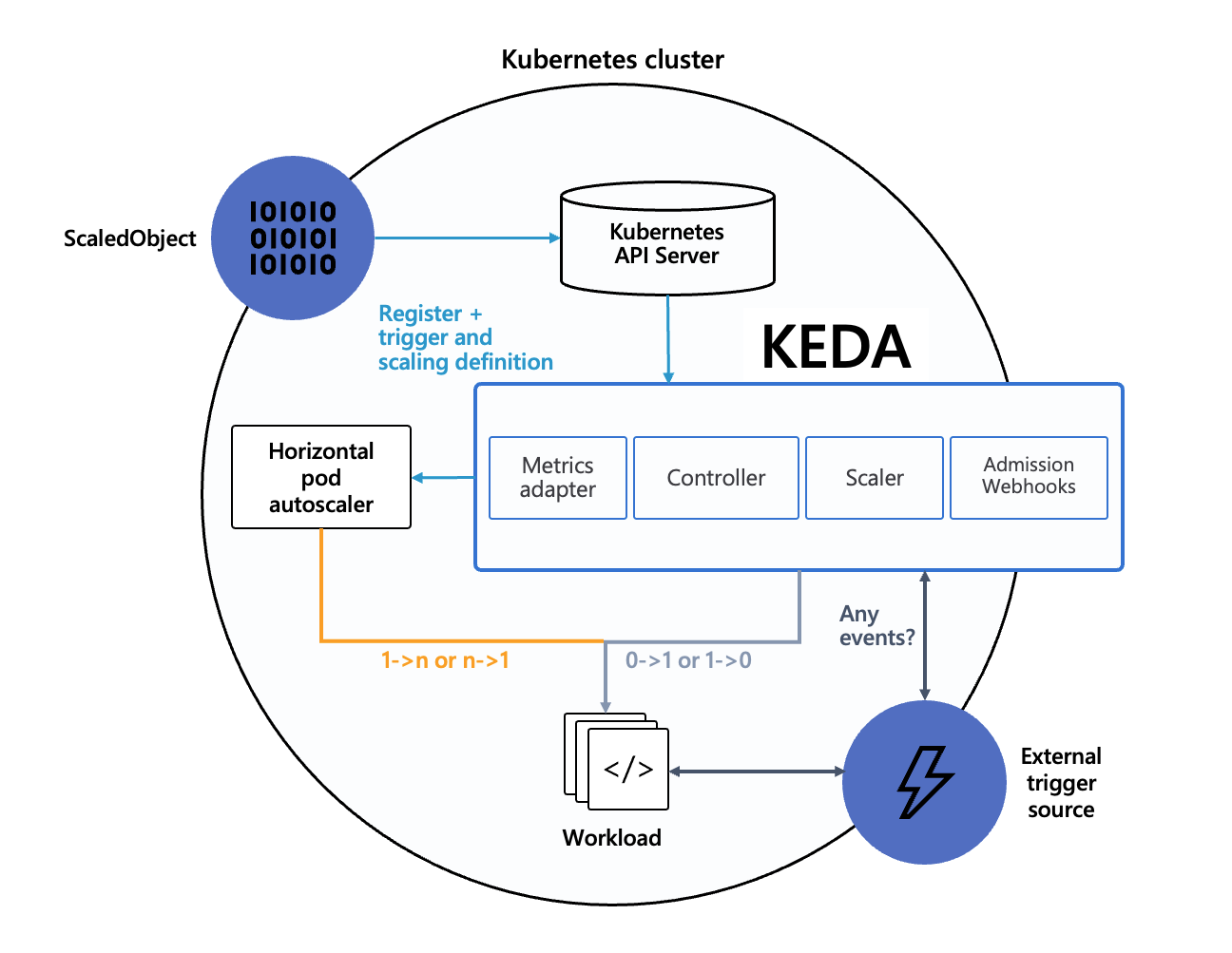

KEDA is a Kubernetes-based Event Driven Autoscaler. With KEDA, you can drive the scaling of any container in Kubernetes based on the number of events needing to be processed.

KEDA is a single-purpose, lightweight component that can be added to any Kubernetes cluster. KEDA works alongside standard Kubernetes components like the Horizontal Pod Autoscaler and extends their functionality without overwriting or duplicating it. With KEDA you explicitly choose which apps should scale in an event-driven way, while other apps continue to function as before. This makes KEDA a flexible and safe option to run alongside any number of other Kubernetes applications or frameworks.

KEDA performs three key roles within Kubernetes:

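1. Agent: KEDA activates and deactivates Kubernetes Deployments to scale to and from zero when there are no events. This is one of the primary roles of the keda-operator container that runs when you install KEDA.
2. Metrics: KEDA acts as a Kubernetes metrics server that exposes event data such as queue length or stream lag to the Horizontal Pod Autoscaler to drive scale-out. Serving metrics is the primary role of the keda-operator-metrics-apiserver container that runs when you install KEDA.
3. Admission Webhooks: KEDA automatically validates resource changes to prevent misconfiguration, for example by preventing multiple ScaledObjects from targeting the same scale target.

The diagram below shows how KEDA works in conjunction with the Kubernetes Horizontal Pod Autoscaler, external event sources, and Kubernetes’ etcd data store: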

KEDA has a wide range of scalers. A scaler both detects whether a deployment should be activated or deactivated and feeds custom metrics for a specific event source. The available scalers are listed in the KEDA scalers documentation.
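To make the two scaler responsibilities above more concrete, here is a minimal Python sketch of the decision they feed into. It is not KEDA's implementation: `ScalerReading`, `desired_replicas`, and the parameter names are hypothetical, and the replica calculation is a simplified version of the Horizontal Pod Autoscaler's average-value rule (total metric divided by the target per replica, rounded up) that ignores tolerances and stabilization windows.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalerReading:
    """One poll of a hypothetical event source, such as a message queue."""
    is_active: bool       # are there events waiting to be processed?
    metric_value: float   # e.g. the current queue length


def desired_replicas(reading, target_per_replica, min_replicas=0, max_replicas=10):
    """Simplified model of activation plus metric-driven scaling."""
    # Activation: with no pending events, fall back to the minimum,
    # which may be zero.
    if not reading.is_active:
        return min_replicas
    # Scaling: spread the total metric across replicas so that each one
    # handles at most `target_per_replica` events, rounded up.
    wanted = math.ceil(reading.metric_value / target_per_replica)
    # Clamp to the allowed range and keep at least one replica while active.
    return max(1, min_replicas, min(wanted, max_replicas))


if __name__ == "__main__":
    print(desired_replicas(ScalerReading(False, 0), 20))    # 0
    print(desired_replicas(ScalerReading(True, 45), 20))    # 3
    print(desired_replicas(ScalerReading(True, 500), 20))   # 10
```

The split mirrors the description above: the activation check decides whether the workload runs at all, and the metric value decides how many replicas it runs with.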

When you install KEDA, it creates four custom resources:

- scaledobjects.keda.sh
- scaledjobs.keda.sh
- triggerauthentications.keda.sh
- clustertriggerauthentications.keda.sh

These custom resources enable you to map an event source (and the authentication to that event source) to a Deployment, StatefulSet, Custom Resource or Job for scaling.

- ScaledObjects represent the desired mapping between an event source (e.g. RabbitMQ) and the Kubernetes Deployment, StatefulSet or any Custom Resource that defines the /scale subresource.
- ScaledJobs represent the mapping between an event source and a Kubernetes Job.
- A ScaledObject or ScaledJob may also reference a TriggerAuthentication or ClusterTriggerAuthentication, which contains the authentication configuration or secrets needed to monitor the event source.

See the Deployment documentation for instructions on how to deploy KEDA into any cluster using tools like Helm.
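As a rough illustration of how these resources fit together, the following Python sketch builds a ScaledObject and the TriggerAuthentication it references as plain dictionaries and prints them as JSON manifests. The helper functions and every resource name (`orders-scaler`, `orders-consumer`, `orders`, `rabbitmq-auth`, `rabbitmq-secret`) are hypothetical. The field names follow the `keda.sh/v1alpha1` API and the RabbitMQ scaler as commonly documented, but should be checked against the scaler reference for your KEDA version.

```python
import json


def scaled_object(name, deployment, queue_name, auth_name, queue_length_target=20):
    """Map a RabbitMQ queue (the event source) to a Deployment."""
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": name},
        "spec": {
            # The workload to scale; a Deployment is assumed here.
            "scaleTargetRef": {"name": deployment},
            "minReplicaCount": 0,
            "maxReplicaCount": 10,
            "triggers": [
                {
                    "type": "rabbitmq",
                    # Trigger metadata values are strings.
                    "metadata": {
                        "queueName": queue_name,
                        "mode": "QueueLength",
                        "value": str(queue_length_target),
                    },
                    # Points at the TriggerAuthentication defined below.
                    "authenticationRef": {"name": auth_name},
                }
            ],
        },
    }


def trigger_authentication(name, secret_name, secret_key):
    """Read the RabbitMQ connection string from a Kubernetes Secret."""
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "TriggerAuthentication",
        "metadata": {"name": name},
        "spec": {
            "secretTargetRef": [
                {"parameter": "host", "name": secret_name, "key": secret_key}
            ]
        },
    }


if __name__ == "__main__":
    auth = trigger_authentication("rabbitmq-auth", "rabbitmq-secret", "host")
    scaler = scaled_object("orders-scaler", "orders-consumer", "orders", "rabbitmq-auth")
    print(json.dumps(auth, indent=2))
    print(json.dumps(scaler, indent=2))
```

Keeping the secret reference in a separate TriggerAuthentication means several ScaledObjects or ScaledJobs in the same namespace can share one authentication definition.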